A data network built for scale in the real world

Homes & everyday life

We've partnered with home service professionals across India—cooks, cleaners, and household help—so we can capture a broad range of day-to-day tasks across thousands of unique home layouts.

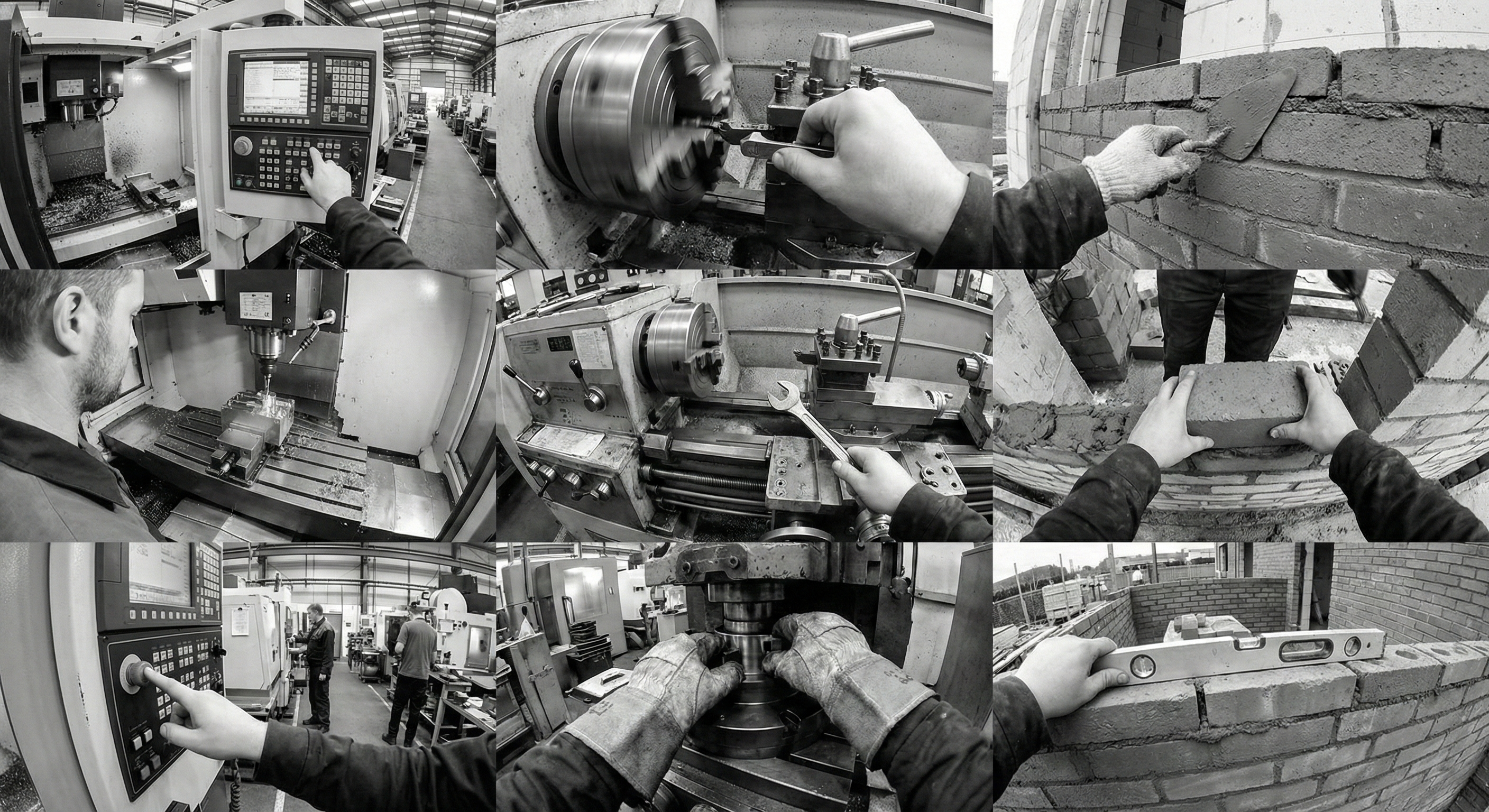

Factories & industrial sites

We also partner with factories, warehouses, and construction operators to capture industrial workflows—where robots need to perform reliably in complex, changing environments.